Create a score

From the application

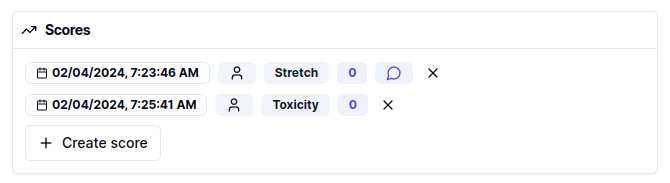

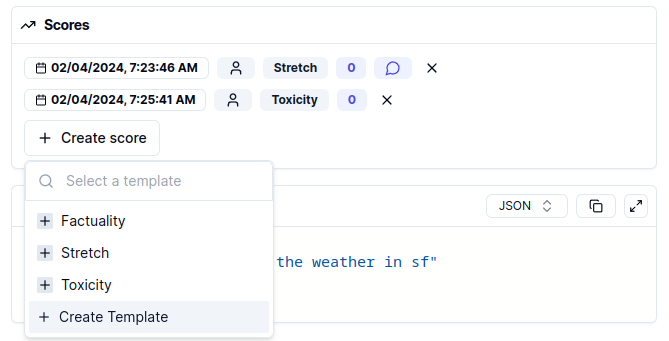

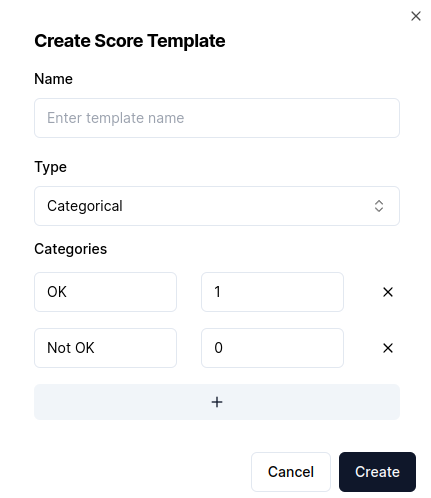

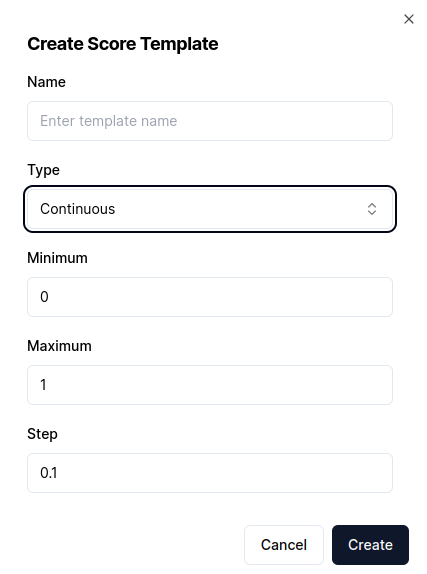

The Literal application offers an easy way to manage scores: Score Templates. To create a Score Template, click on “Create score” and select the “Create Template” option:

Programmatically

The SDKs provide score creation APIs that expose every score field. Each score must be tied to either a Step or a Generation object. In Python, scores are created with the `create_score` API.

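The sketch below does not call the Literal SDK. It is a minimal, SDK-independent illustration of the rule that a score must be tied to a Step or a Generation. The hypothetical `ScoreRequest` class validates a score payload before it would be sent.

Its field names (`name`, `value`, `step_id`, `generation_id`, `comment`) are illustrative assumptions, not the actual `create_score` signature. It also assumes that exactly one target (Step or Generation) is set per score.

```python
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass
class ScoreRequest:
    """Hypothetical score payload; field names are illustrative, not the SDK's API."""

    name: str
    value: float
    step_id: Optional[str] = None        # hypothetical: ID of the Step being scored
    generation_id: Optional[str] = None  # hypothetical: ID of the Generation being scored
    comment: Optional[str] = None

    def __post_init__(self) -> None:
        # A score must be tied to a Step or a Generation.
        # Assumption for this sketch: exactly one of the two is provided.
        targets = [t for t in (self.step_id, self.generation_id) if t is not None]
        if len(targets) != 1:
            raise ValueError(
                "a score must reference exactly one of step_id or generation_id"
            )

    def to_dict(self) -> dict:
        """Return the payload, omitting fields that were left unset."""
        return {k: v for k, v in asdict(self).items() if v is not None}


if __name__ == "__main__":
    # A human-feedback score attached to a Step.
    feedback = ScoreRequest(name="user-feedback", value=1, step_id="step-123")
    print(feedback.to_dict())

    # A score with no target is rejected before anything is sent.
    try:
        ScoreRequest(name="accuracy", value=0.8)
    except ValueError as err:
        print(f"rejected: {err}")
```

Validating in `__post_init__` means an invalid score can never be constructed. The Step-or-Generation rule is therefore enforced once, at creation time, rather than at every call site that submits a score.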